Sixteen Years of Python Performance

Python 3.0 was released 16 years ago this December. I loved it right away. The syntax was clean and its Unicode model was fantastic - that was a big pain point for me at the time. Not everybody felt the same way. The grumblers complained about the lack of backwards compatibility and the poor performance. They had a point then, but do they still have a point now?

Performance by Version

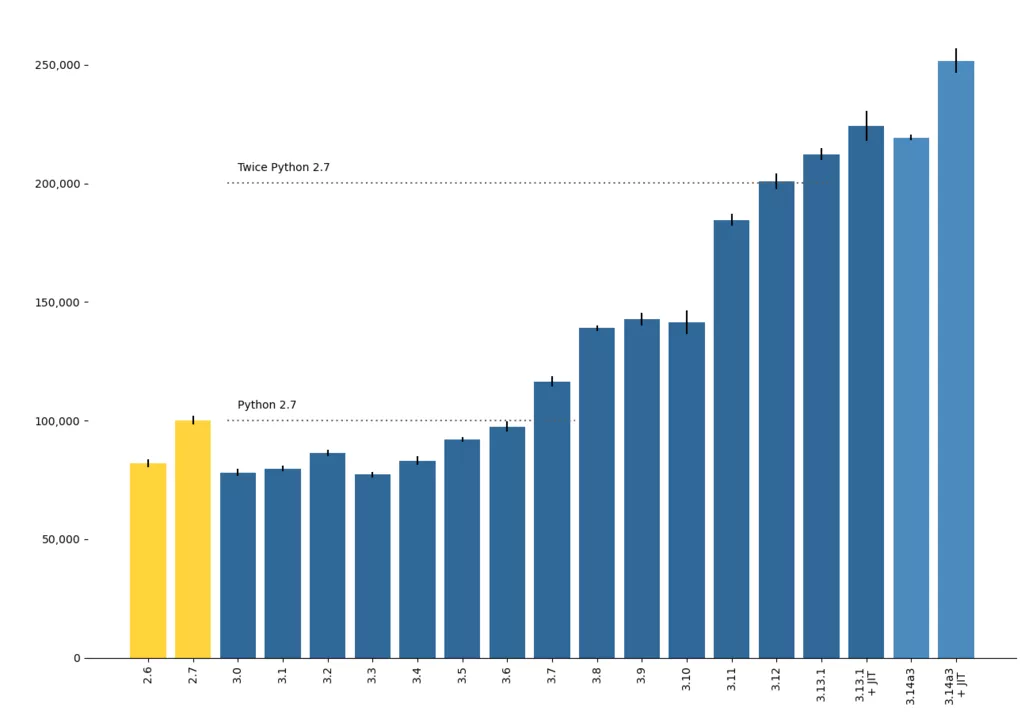

Let's start at the end, and show the results right away. You can see that Python 2.7 was indeed a fast release - and that the grumblers were right. Early versions of Python 3 were not very efficient, and they stayed slow for quite a few releases.

With pleasing symmetry, we finally passed Python 2.7 with Python 3.7, released back in 2018 and shipped with Debian 10 (Buster).

Python 3.12 saw us double Python 2's best effort, and the optional JIT shipped with the soon-to-be-released Python 3.13 squeezes out a few more percentage points. We're going great!

Detailed Results

The benchmark I used is the silly solitaire Snakes & Ladders benchmark I've been using for many years. It creates loads of tuples and lists and does lots of dictionary look-ups. I had to create a simplified version of the script that runs on all of these Python versions.

I tweaked the number of iterations so that a single run of the script took 20-60 seconds. Then, for each build of Python, I ran the script 10 times using the hyperfine benchmark runner, plus one warm-up run which was ignored.
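As a rough illustration of how timings like these turn into a "games per second" figure, here is a minimal sketch. It assumes the timings were saved with hyperfine's `--export-json` option, whose output includes a `times` list of per-run durations in seconds. The script name, the number of games per run, and the sample timings below are all made up, and the post does not say exactly how the standard deviation column was derived, so treat this as one plausible approach rather than the method actually used.

```python
import json
import statistics

# Hypothetical stand-in for a file written by something like:
#   hyperfine --warmup 1 --runs 10 --export-json result.json "python snakes.py"
# The command name and the timings are invented for this example.
SAMPLE_EXPORT = """
{"results": [{"command": "python snakes.py",
              "times": [31.8, 32.4, 32.1, 31.9, 32.3]}]}
"""


def games_per_second(games_per_run, run_times):
    """Return (mean, sample standard deviation) of the per-run throughput."""
    rates = [games_per_run / seconds for seconds in run_times]
    mean_rate = statistics.mean(rates)
    # A standard deviation needs at least two runs.
    spread = statistics.stdev(rates) if len(rates) > 1 else 0.0
    return mean_rate, spread


def summarize(export_text, games_per_run):
    """Read the first benchmark in a hyperfine JSON export and summarize it."""
    first_result = json.loads(export_text)["results"][0]
    return games_per_second(games_per_run, first_result["times"])


if __name__ == "__main__":
    # 4,000,000 games per run is an assumed figure, not the one used in the post.
    mean_rate, spread = summarize(SAMPLE_EXPORT, games_per_run=4_000_000)
    print(f"{mean_rate:,.0f} games/s (std. dev. {spread:,.0f})")
```

Converting each run to a rate before averaging keeps the mean and the spread in the same unit as the table.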

| Python Version | Games per second | Std. dev. |
| --- | --- | --- |
| 2.6.9 | 81,924 | 1,655 |
| 2.7.18 | 100,138 | 1,769 |
| 3.0.1 | 78,160 | 1,613 |
| 3.1.5 | 79,736 | 1,162 |
| 3.2.6 | 86,383 | 1,207 |
| 3.3.7 | 77,193 | 1,268 |
| 3.4.10 | 83,097 | 1,816 |
| 3.5.10 | 91,988 | 1,032 |
| 3.6.15 | 97,531 | 2,306 |
| 3.7.17 | 116,547 | 2,206 |
| 3.8.18 | 138,947 | 1,205 |
| 3.9.18 | 142,735 | 2,604 |
| 3.10.12 | 141,423 | 5,128 |
| 3.11.5 | 184,631 | 2,563 |
| 3.12.4 | 200,844 | 3,405 |
| 3.13rc1 | 205,381 | 3,088 |
| 3.13rc1 + JIT | 218,255 | 3,268 |
| 3.13.1 | 212,323 | 2,552 |
| 3.13.1 + JIT | 224,245 | 6,276 |
| 3.14a3 | 219,375 | 1,136 |
| 3.14a3 + JIT | 251,750 | 5,121 |
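To put the headline claims in numbers, the short sketch below divides a few rows of the table by the Python 2.7.18 baseline. The figures are copied from the table; the helper name `speedup` is just something chosen for this example.

```python
# Games per second, copied from a few rows of the results table.
GAMES_PER_SECOND = {
    "2.7.18": 100_138,
    "3.0.1": 78_160,
    "3.7.17": 116_547,
    "3.12.4": 200_844,
    "3.13.1": 212_323,
    "3.13.1 + JIT": 224_245,
    "3.14a3 + JIT": 251_750,
}


def speedup(version, baseline="2.7.18"):
    """Throughput of `version` as a multiple of the baseline's throughput."""
    return GAMES_PER_SECOND[version] / GAMES_PER_SECOND[baseline]


if __name__ == "__main__":
    for version in GAMES_PER_SECOND:
        print(f"{version:>13}: {speedup(version):.2f}x Python 2.7.18")
    jit_gain = speedup("3.13.1 + JIT", baseline="3.13.1") - 1
    print(f"JIT gain on 3.13.1: {jit_gain:.1%}")
```

On these numbers, 3.0.1 ran at roughly 0.78x the speed of 2.7.18, 3.7.17 at about 1.16x, and 3.12.4 at just over 2x, while the JIT build of 3.13.1 is about 5.6% ahead of the non-JIT build.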

Building 17 Pythons

To be as fair as possible, I built every version of Python tested here from source. I used the same compiler, the same computer, and the 'best' settings supported. For reasons I'll soon get into, it was far and away the most painful part of this project!

| Python Version | Configuration options |
| --- | --- |
| 2.6 | ./configure |
| 2.7 | ./configure --enable-optimizations |
| 3.0 | ./configure |
| 3.1 to 3.3 | Manually patch code to fix segfault, then: ./configure |
| 3.4 | ./configure |
| 3.5 to 3.13 | ./configure --enable-optimizations --with-lto |
| 3.13 + JIT | ./configure --enable-optimizations --with-lto --enable-experimental-jit |

Problem building versions 3.1 to 3.3

This project was an interesting piece of code archaeology. I kept getting mysterious segfaults after building early Python 3 versions from source on my 64-bit Linux system using a modern C compiler (specifically GCC 13.2). They caused so much trouble that versions 3.1 to 3.3 were left off the chart until the last minute.

For a long time I thought it was an SSL version problem (there's a story there too), but it finally turned out to be a subtle alignment problem in PyGC_Head, declared in Include/objimpl.h.

This is the type of most Python variables and is a union containing a struct (dark magic indeed!) or a dummy value. The type of that dummy value changed back in 2012, in the single-line commit e348c8d1, from a long double to a plain double. Making that same change on the old source code fixed the problem with Python segfaulting immediately. Apparently there was a compiler warning back then that prompted the fix. I'm sure I was warned too, but building these old source packages manually I was swamped with compiler warnings, so there was no way I'd find it.
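To see why the type of a dummy member matters, here is a small sketch using the standard library's ctypes module to mirror the shape of such a union. It is not a reproduction of the crash, and the field names are illustrative stand-ins rather than a faithful copy of the CPython header. It only shows that the dummy member's type can change the alignment and size the compiler assigns to the whole union.

```python
import ctypes


class GCLinks(ctypes.Structure):
    # Illustrative stand-in for the struct inside the union:
    # two pointers and a pointer-sized counter.
    _fields_ = [
        ("gc_next", ctypes.c_void_p),
        ("gc_prev", ctypes.c_void_p),
        ("gc_refs", ctypes.c_ssize_t),
    ]


class HeadWithLongDouble(ctypes.Union):
    # Shape before the fix: the dummy member is a long double.
    _fields_ = [("gc", GCLinks), ("dummy", ctypes.c_longdouble)]


class HeadWithDouble(ctypes.Union):
    # Shape after the fix: the dummy member is a plain double.
    _fields_ = [("gc", GCLinks), ("dummy", ctypes.c_double)]


def describe(union_type):
    """Return (size in bytes, required alignment in bytes) for a ctypes type."""
    return ctypes.sizeof(union_type), ctypes.alignment(union_type)


if __name__ == "__main__":
    for union_type in (HeadWithLongDouble, HeadWithDouble):
        size, alignment = describe(union_type)
        print(f"{union_type.__name__}: size={size}, alignment={alignment}")
```

The exact numbers depend on the platform's C ABI. On a typical 64-bit Linux system I would expect the long double variant to be both larger and more strictly aligned than the double variant, whereas on platforms where long double is the same as double the two will match.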

The lesson here is that you have to keep building your code and reading those warning messages: -Wall is your friend and unions are dangerous!

Closing Thoughts

Using a child's game as a Python benchmark is a reminder that benchmarks are silly. The performance of your own code is what's important. This is especially true in Python, as so much of what we think of as our code is actually running highly-tuned modules written in C or C++, or recently even Rust.

That said, the performance gains we've seen in the last half-decade or so have been very welcome indeed. They have been the result of countless engineer-hours, for which we should be suitably thankful. It has been tremendous to see these gains version by version.

Lastly, if your Python program needs a little more raw CPU performance, there are ways forward that don't require waiting for a new Python version. First, try running your program under PyPy. After that, use all of your system's CPU cores with something like ProcessPoolExecutor from the standard library's concurrent.futures module. Finally, find the biggest hot-spot in your program and replace just that code with a module written in a compiled language. I recently did this again using Rust and I was very impressed by how easy it has gotten.
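As a minimal sketch of the second suggestion, the example below spreads a toy dice game across worker processes with ProcessPoolExecutor. The board, the function names, and the batch sizes are all invented for illustration; this is not the benchmark script used for the results above.

```python
import random
from concurrent.futures import ProcessPoolExecutor

# Made-up board: landing on a key moves the token to the matching value.
JUMPS = {4: 14, 9: 31, 17: 7, 28: 84, 54: 34, 62: 19, 80: 99, 95: 75}
LAST_SQUARE = 100


def play_game(rng):
    """Play one solitaire game and return the number of dice rolls it took."""
    position = 0
    rolls = 0
    while position < LAST_SQUARE:
        position += rng.randint(1, 6)
        position = JUMPS.get(position, position)
        rolls += 1
    return rolls


def play_batch(task):
    """Worker function: play a batch of games with its own seeded generator."""
    seed, games = task
    rng = random.Random(seed)
    return sum(play_game(rng) for _ in range(games))


def total_rolls(batches, games_per_batch, max_workers=None):
    """Run the batches in separate processes and add up the results."""
    tasks = [(seed, games_per_batch) for seed in range(batches)]
    # max_workers=None lets the executor pick a default based on the CPU count.
    with ProcessPoolExecutor(max_workers=max_workers) as pool:
        return sum(pool.map(play_batch, tasks))


if __name__ == "__main__":
    # The guard matters: worker processes may re-import this module.
    print(total_rolls(batches=4, games_per_batch=2_000))
```

Each batch gets its own seed so the workers don't share random state, and the work is handed out in sizeable batches so that the cost of passing tasks between processes stays small compared with the work itself.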

Updated 10 Feb 2025, first published 9 Sep 2024